
The Model Context Protocol (MCP): architecture, security risks, and best practices

Content writer and editor

Updated

Nov 14, 2025


The Model Context Protocol (MCP) is rapidly becoming a foundational element in the architecture of advanced AI systems, especially agentic AI applications that need to access external data sources, tools, and workflows dynamically. Anthropic developed and open-sourced MCP in late 2024 to solve the problem of AI isolation: it provides a universal, open standard that connects large language models (LLMs) to the business and development systems where relevant data resides.

However, this powerful abstraction layer also introduces significant new security risks because it creates dynamic pathways between AI agents and core IT infrastructure. Securing MCP deployments is therefore crucial for organizations seeking to leverage AI-driven automation.

Understanding the MCP

The MCP is an open-source standard that facilitates secure, two-way communication between AI applications (the clients) and external systems (the servers). It functions much like a universal connector or a "USB-C port for AI," replacing the need for brittle, custom-built integrations for every new data source or tool.

From fragmentation to standardization

Before MCP, connecting an LLM to disparate systems (e.g., a Google Drive repository, a Slack channel, or a PostgreSQL database) required creating separate, ad-hoc API integrations for each. This fragmented approach made scalability and maintenance difficult. MCP addresses this challenge by providing a unified specification, allowing developers to build on a single, standardized protocol. This standardization dramatically reduces integration barriers, enabling AI models to maintain rich context across multiple interactions and perform context-aware actions on behalf of the user.

Core architecture: client-server model

MCP adheres to a straightforward client-server architecture:

MCP architecture diagram illustrating three different flows.

MCP host: This is the application or environment with which the user interacts (e.g., an AI-powered IDE, a personalized business chatbot, or a desktop app such as Claude Desktop). The host manages the user experience, coordinates the AI model, and creates one MCP client for each server it connects to.

MCP client: This is a component instantiated by the host application that manages the dedicated one-to-one connection and communication with an MCP server. It acts as the intermediary that translates the AI's intent into protocol-level requests for the server and relays the results back.

MCP server: This is an external program, often a local process or a remote service, that exposes specific capabilities (tools, resources, and prompts) to the AI application through MCP. Servers can connect to file systems, databases, cloud services, and version control platforms such as GitHub and GitLab, among other systems.
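To make the host/client/server split concrete, here is a toy, in-process sketch. MCP messages are JSON-RPC 2.0, and a server advertises its tools through the `tools/list` method; everything else here (the `toy_server` function, the `echo` tool, the `ToyClient` class, and the direct function call standing in for a stdio or HTTP transport) is a hypothetical simplification. It also skips the `initialize` handshake that a real MCP session starts with, so it is not the official SDK.

```python
import itertools
import json
from typing import Any, Callable, Dict, Optional


def toy_server(raw: str) -> str:
    """Hypothetical in-process server: JSON-RPC request string in, response string out."""
    request = json.loads(raw)
    if request.get("method") == "tools/list":
        result = {"tools": [{
            "name": "echo",
            "description": "Echo the given text back.",
            "inputSchema": {
                "type": "object",
                "properties": {"text": {"type": "string"}},
                "required": ["text"],
            },
        }]}
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})
    # -32601 is JSON-RPC's standard "Method not found" error code.
    return json.dumps({"jsonrpc": "2.0", "id": request.get("id"),
                       "error": {"code": -32601, "message": "Method not found"}})


class ToyClient:
    """One client per server connection, mirroring MCP's one-to-one model."""

    def __init__(self, transport: Callable[[str], str]) -> None:
        self._transport = transport       # stand-in for a stdio pipe or HTTP connection
        self._ids = itertools.count(1)    # JSON-RPC request IDs

    def request(self, method: str, params: Optional[Dict[str, Any]] = None) -> Any:
        message: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(self._ids), "method": method}
        if params is not None:
            message["params"] = params
        reply = json.loads(self._transport(json.dumps(message)))
        if reply.get("id") != message["id"]:
            raise RuntimeError("response ID does not match request ID")
        if "error" in reply:
            raise RuntimeError(reply["error"]["message"])
        return reply["result"]


# The host owns one client per connected server and decides what the model may see.
host_clients = {"toy": ToyClient(toy_server)}
```

In a real host, each client would wrap a pipe or network connection to a separate server process, and the host would merge the tool lists from all its clients before presenting them to the model.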

The three primitives: tools, resources, and prompts

The core of MCP is the data layer, defined by three fundamental primitives that MCP servers can expose to clients:

| Primitive | Description | Control mechanism | Examples of functionality |
|---|---|---|---|
| Tools | Executable functions that the AI application can invoke to perform actions with defined inputs and outputs. | Model-controlled (the AI decides when and how to invoke them based on the user request). | Search for flights; send messages; modify a file; run a query on a database. |
| Resources | Passive data sources that provide read-only contextual information. | Application-controlled (the client decides what information to retrieve and how to present it as context to the model). | Retrieve document content; access database schemas; read API documentation; fetch calendar entries. |
| Prompts | Structured, reusable templates that guide or structure interactions with the LLM. They can reference available tools and resources. | User-controlled (requires explicit invocation, often via slash commands or dedicated buttons). | "/deliver-vulnerability-report" template; "Summarize my meetings" system prompt. |
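The control column can be read as a policy about who may trigger each primitive. One way a host might encode it is sketched below. The method names `tools/call`, `resources/read`, and `prompts/get` come from the MCP specification; the `Actor` enum and the strict one-actor-per-method rule are a hypothetical, deliberately conservative host policy.

```python
from enum import Enum


class Actor(Enum):
    MODEL = "model"              # the LLM deciding on its own to act
    APPLICATION = "application"  # host/client code selecting context
    USER = "user"                # an explicit human action (slash command, button)


# Hypothetical host policy mirroring the "Control mechanism" column of the table.
EXPECTED_ACTOR = {
    "tools/call": Actor.MODEL,
    "resources/read": Actor.APPLICATION,
    "prompts/get": Actor.USER,
}


def check_trigger(method: str, actor: Actor) -> None:
    """Raise if `actor` is not the party expected to trigger `method`."""
    expected = EXPECTED_ACTOR.get(method)
    if expected is None:
        raise ValueError(f"unknown primitive method: {method}")
    if actor is not expected:
        raise PermissionError(
            f"{method} must be triggered by the {expected.value}, not the {actor.value}"
        )
```

For example, `check_trigger("prompts/get", Actor.MODEL)` raises `PermissionError`, so the model cannot silently pull in a prompt template the user never selected.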

Security risks of the MCP

The integration capability that makes MCP so powerful is also its primary security concern. By creating direct, dynamic pathways between AI models (the new control plane) and sensitive enterprise systems, MCP erodes traditional security boundaries that rely on system isolation. Because a single MCP server often holds credentials for several systems, compromising it can lead to a breach of multiple high-value systems.

Supply chain and integrity risks

Because anyone can develop and distribute MCP servers, and many operate with elevated privileges, they become high-value targets for supply chain attacks.

Malicious or poisoned servers: Attackers can distribute fake servers through public repositories, such as GitHub or PyPI, with names that are deceptively similar to legitimate ones (a tactic known as name collision or typosquatting). Once installed, these servers can contain backdoors, exfiltrate data, or execute arbitrary code with the same privileges as the user or host application.

Installer/code spoofing: Installing a local MCP server often involves running installation scripts, which gives attackers an opportunity to distribute tampered installers or inject malware.

Unvetted dependencies: MCP servers are essentially software packages that depend on upstream libraries. A compromise in one of these dependencies can introduce vulnerabilities into the server's codebase (code injection or backdoors).
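Typosquatted server names can be caught with a simple similarity check before installation. This is a sketch using Python's standard `difflib`; the approved names and the 0.85 cutoff are hypothetical. A name check alone does not stop an attacker who reuses an exact name from a different publisher, so it complements, rather than replaces, verifying the publisher and the artifact's checksum.

```python
import difflib

# Hypothetical allowlist of server package names your organization has vetted.
APPROVED_SERVERS = frozenset({
    "mcp-server-github",
    "mcp-server-postgres",
    "mcp-server-filesystem",
})


def classify_server_name(name: str, approved=APPROVED_SERVERS, cutoff: float = 0.85) -> str:
    """Return 'approved', 'suspicious: resembles <name>', or 'unknown'."""
    if name in approved:
        return "approved"
    # A near-miss of an approved name is a classic typosquatting signal.
    close = difflib.get_close_matches(name, sorted(approved), n=1, cutoff=cutoff)
    if close:
        return f"suspicious: resembles {close[0]}"
    return "unknown"
```

Unknown names still go through the full vetting process; suspicious ones are best blocked outright.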

Authorization and authentication flaws

MCP's authorization specification builds on OAuth 2.1, but implementation errors can lead to critical identity and access vulnerabilities.

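Confused deputy attacks (token hijacking): This OAuth flow vulnerability affects MCP proxy servers (servers that act as a gateway to a third-party API). When a proxy uses a single static client ID with the third-party authorization server but lets MCP clients register dynamically, an attacker can register a malicious client and send the user a crafted authorization link. If the third-party server has already stored the user's consent for the proxy's static client ID, it may skip the consent screen, and the resulting authorization code is redirected to the attacker's server, allowing the attacker to obtain access tokens and impersonate the user.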

Token passthrough (anti-pattern): This practice, explicitly prohibited by the MCP specification, occurs when an MCP server accepts an access token from the MCP client and passes it directly to a downstream API without validating that the token was issued specifically for the MCP server. It bypasses essential security controls, such as rate limiting and audience validation, and hinders auditing.

Over-privileged scopes and credential leakage: MCP servers often request broad permission scopes (e.g., full read/write access to Gmail and Google Drive) for convenience. This centralization of multiple highly sensitive tokens (e.g., API keys, OAuth tokens) means that a compromise of a single server can result in a massive blast radius across the connected ecosystem and the leakage of confidential information.

Tool and agent manipulation attacks

These attacks leverage the AI's dependence on primitives (tools, resources, and prompts) to achieve malicious ends, often by exploiting the agent's internal logic.

Prompt injection and tool poisoning: Malicious instructions can be hidden in user input, in a tool's description or docstring, or in the content of a resource. The LLM then interprets these hidden commands as legitimate instructions and performs unauthorized actions, such as:

Exfiltrating sensitive data (e.g., sending SSH keys via a legitimate "send_email" tool)

Modifying database records

Writing insecure code

Context leakage: Since the AI agent shares parts of the conversation, state, and resource data with connected tools to maintain context, an untrustworthy or malicious server could access sensitive information intended for another tool or only for the user.

Cross-server shadowing attacks: In an environment with multiple connected MCP servers, a malicious server may register tools with names that are identical or very similar to those of tools on a legitimate and trusted server (e.g., two different "delete" commands). The AI model may mistakenly invoke the malicious tool, resulting in unauthorized actions and even data loss.
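A client can detect shadowing before exposing tools to the model by comparing tool names across servers, and it can keep identical names distinct by namespacing every tool with its server. The sketch below is hypothetical (the server names, tool names, `__` separator, and 0.85 similarity cutoff are all illustrative), and it only catches name-level collisions, not a malicious tool with an entirely different name.

```python
import difflib
from itertools import combinations


def find_shadowing(tools_by_server: dict[str, list[str]],
                   cutoff: float = 0.85) -> list[tuple[str, str, str, str]]:
    """Return (server_a, tool_a, server_b, tool_b) pairs whose names collide or nearly collide."""
    entries = [(server, tool) for server, tools in tools_by_server.items() for tool in tools]
    conflicts = []
    for (s1, t1), (s2, t2) in combinations(entries, 2):
        if s1 == s2:
            continue  # only collisions between different servers count as shadowing
        ratio = difflib.SequenceMatcher(None, t1.lower(), t2.lower()).ratio()
        if t1 == t2 or ratio >= cutoff:
            conflicts.append((s1, t1, s2, t2))
    return conflicts


def namespaced(tools_by_server: dict[str, list[str]]) -> dict[str, tuple[str, str]]:
    """Expose each tool to the model as '<server>__<tool>' so identical names stay distinct."""
    return {
        f"{server}__{tool}": (server, tool)
        for server, tools in tools_by_server.items()
        for tool in tools
    }
```

A client could refuse to load a server whose tools appear in `find_shadowing` output until a user reviews the conflict.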

Local execution and isolation risks (local MCP servers)

Local MCP servers, which run on the user's machine (often with the same privileges as the user or the client application), introduce significant system-level risks.

Arbitrary code execution and sandbox escape: If an attacker can inject input into a command that a local server executes (for example, through an unsanitized tool parameter), they can run any command on the host machine with the server's privileges. If the server is not sandboxed (e.g., in a container or another restricted environment), nothing contains the compromise; even when it is, weak isolation can allow a sandbox escape. Either way, the attacker can move laterally across the host machine and even the internal network.

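DNS rebinding: A malicious or compromised web page can make its domain resolve to 127.0.0.1, so JavaScript running in the victim's browser can send requests to an insecure server listening on "localhost" while the browser treats them as same-origin. This can lead to data exfiltration or unauthorized actions.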
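A common defense for local HTTP servers is to bind only to the loopback interface and reject requests whose `Host` or `Origin` headers do not name that loopback address; the MCP specification's Streamable HTTP transport guidance asks servers to validate `Origin` for this reason. Below is a minimal, framework-agnostic check, assuming headers arrive as a plain mapping; the port and allowed origin are hypothetical.

```python
from typing import Mapping

LOOPBACK_HOSTS = ("127.0.0.1", "localhost", "[::1]")


def is_request_allowed(headers: Mapping[str, str], port: int, allowed_origins: set[str]) -> bool:
    """Reject requests that reach the local server under a foreign hostname or origin."""
    h = {k.lower(): v for k, v in headers.items()}  # header names are case-insensitive
    # After DNS rebinding, the browser still sends the attacker's hostname in Host.
    if h.get("host") not in {f"{name}:{port}" for name in LOOPBACK_HOSTS}:
        return False
    origin = h.get("origin")
    # Browsers attach Origin to cross-origin requests; non-browser clients may omit it.
    if origin is not None and origin not in allowed_origins:
        return False
    return True
```

Combine this with binding the listener to `127.0.0.1` rather than `0.0.0.0` and with authentication, since a header check alone does not stop other local processes.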

Best practices for MCP security

Securing MCP requires a shift from traditional network-centric defenses to an identity- and context-aware control plane approach. The following best practices, drawn from community standards and security research, are crucial for mitigating MCP risks.

Server vetting and supply chain security

Treat every MCP server and its dependencies as privileged software that can access your most critical systems.

Establish a trust registry: Maintain an internal inventory of approved MCP servers and their vetted versions. Only allow installation from this pre-approved list.

Vetting and security checks: Implement rigorous security reviews, static analysis (SAST), and software composition analysis (SCA) for all server code and dependencies prior to deployment.

Cryptographic verification: Require the use of signed packages and integrity checks (checksums/signatures) to ensure that the code being executed is the official, untampered version.

Pin versions: Configure MCP clients to pin server versions to prevent unexpected behavior changes or security regressions from automatic updates. Track the upstream project for patches and vet them before deployment.
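A trust registry with pinned versions and checksums can be as simple as a lookup table consulted before a server is installed or launched. In this sketch, the `ApprovedServer` record, the registry contents, and hashing the downloaded artifact bytes are assumptions for illustration. SHA-256 pinning checks integrity against what you vetted; verifying the publisher's signature (for example, with Sigstore) is a separate, complementary step not shown here.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ApprovedServer:
    name: str
    version: str  # the exact version that passed review
    sha256: str   # hex digest of the reviewed artifact


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def verify_before_install(name: str, version: str, artifact: bytes,
                          registry: dict[str, ApprovedServer]) -> None:
    """Raise PermissionError unless name, pinned version, and digest all match the registry."""
    entry = registry.get(name)
    if entry is None:
        raise PermissionError(f"{name} is not in the trust registry")
    if version != entry.version:
        raise PermissionError(f"{name} {version} is not the pinned version {entry.version}")
    if sha256_hex(artifact) != entry.sha256:
        raise PermissionError(f"{name} {version} does not match the vetted checksum")
```

With this in place, an upgrade becomes an explicit registry change made only after the new version has been reviewed.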

Authentication and least privilege access

Enforce strict controls over who can connect to a server and what actions the model can take on their behalf.

Strict token scoping: Adhere to the principle of least privilege for all API keys and OAuth tokens used by MCP servers. Issue short-lived, minimally scoped tokens. Each server should only have the permissions necessary for its intended functionality (e.g., a file-reading server should not have database write permissions).

Forbid token passthrough: Your MCP servers must not accept tokens that were not explicitly issued for them (validate each token's audience), and they must not forward client tokens to downstream APIs.
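Audience, expiry, and scope checks can be expressed as a small function over a token's claims. This sketch assumes the token's signature has already been verified by a JWT or token-introspection library and that the claims follow common conventions (`aud` as a string or list, `exp` as a Unix timestamp, `scope` as a space-separated string); the audience URL and scope names are hypothetical. When the server needs to call a downstream API, it should use a separate token issued for that API rather than forwarding this one.

```python
import time
from typing import Any, Iterable, Mapping, Optional


class TokenRejected(Exception):
    pass


def validate_claims(claims: Mapping[str, Any], expected_audience: str,
                    required_scopes: Iterable[str], now: Optional[float] = None,
                    leeway: float = 30.0) -> set[str]:
    """Return the granted scopes, or raise TokenRejected."""
    now = time.time() if now is None else now
    aud = claims.get("aud")
    audiences = [aud] if isinstance(aud, str) else list(aud or [])
    # Audience check: the token must have been issued for *this* MCP server.
    if expected_audience not in audiences:
        raise TokenRejected("token was not issued for this server")
    exp = claims.get("exp")
    if not isinstance(exp, (int, float)) or exp + leeway < now:
        raise TokenRejected("token is expired or has no expiry")
    granted = set(str(claims.get("scope", "")).split())
    # Least privilege: the call must be covered by scopes the token actually grants.
    missing = set(required_scopes) - granted
    if missing:
        raise TokenRejected(f"missing scopes: {sorted(missing)}")
    return granted
```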

Mitigate confused deputy attacks: Your MCP proxy servers must implement per-client consent and appropriate security controls:

Keep a record of approved "client_id" values per user

Implement CSRF (cross-site request forgery) protection on the MCP-level consent page

Prevent clickjacking by disallowing iframing
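The three controls above can be sketched as a consent store that remembers approved `client_id` values per user, a single-use CSRF token bound to the browser session that renders the consent page, and response headers that forbid framing. All names here are hypothetical; a production proxy would persist this state and tie it into its OAuth flow, checking `is_approved` before redirecting to the third-party authorization server.

```python
import hmac
import secrets

# Anti-clickjacking headers for the consent page (modern and legacy forms).
CONSENT_PAGE_HEADERS = {
    "Content-Security-Policy": "frame-ancestors 'none'",
    "X-Frame-Options": "DENY",
}


class ConsentStore:
    def __init__(self) -> None:
        self._approved: dict[str, set[str]] = {}  # user_id -> approved client_ids
        self._csrf: dict[str, str] = {}           # browser session -> pending CSRF token

    def is_approved(self, user_id: str, client_id: str) -> bool:
        return client_id in self._approved.get(user_id, set())

    def issue_csrf_token(self, browser_session: str) -> str:
        """Embed the returned token in the consent form."""
        token = secrets.token_urlsafe(32)
        self._csrf[browser_session] = token
        return token

    def approve(self, user_id: str, client_id: str, browser_session: str, submitted: str) -> None:
        expected = self._csrf.pop(browser_session, None)  # tokens are single use
        if expected is None or not hmac.compare_digest(expected.encode(), submitted.encode()):
            raise PermissionError("CSRF check failed")
        self._approved.setdefault(user_id, set()).add(client_id)
```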

Avoid session-based auth: Your MCP servers must not use session IDs for authentication. Servers that implement authorization must verify every inbound request against a valid token linked to the user.
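In practice, identity comes from the `Authorization: Bearer` header on every request, while a session identifier (such as the `Mcp-Session-Id` header used by MCP's Streamable HTTP transport) only locates conversation state, and that state is keyed by the authenticated user as well. In this sketch, the `verify` callback is a hypothetical stand-in for full token validation (signature, audience, expiry).

```python
from typing import Callable, Mapping, Optional


def bearer_token(headers: Mapping[str, str]) -> str:
    h = {k.lower(): v for k, v in headers.items()}
    scheme, _, token = h.get("authorization", "").partition(" ")
    if scheme.lower() != "bearer" or not token.strip():
        raise PermissionError("missing bearer token")
    return token.strip()


def authenticate(headers: Mapping[str, str], verify: Callable[[str], Optional[str]]) -> str:
    """Return the user ID for this request; a session ID is never treated as identity."""
    user_id = verify(bearer_token(headers))
    if user_id is None:
        raise PermissionError("invalid token")
    return user_id


def state_key(user_id: str, headers: Mapping[str, str]) -> str:
    """Bind session state to the authenticated user so a stolen session ID alone is useless."""
    h = {k.lower(): v for k, v in headers.items()}
    return f"{user_id}:{h.get('mcp-session-id', '')}"
```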

Input validation and tool guardrails

Implement strong barriers to prevent model manipulation and protect the host system.

Rigorously validate inputs: All parameters passed to MCP tools must be validated against their schema (allowed characters, length, format). If a tool executes system commands, use secure APIs or parameterized commands instead of shell concatenation to prevent command injection.
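For a tool that wraps a system command, validating the parameter and passing an argument list (no shell) closes the injection path. This sketch uses a hypothetical `ping` tool; the hostname pattern and count limit are illustrative, and the `-c` flag is the Linux/macOS form of the option.

```python
import re
import subprocess

# One or more DNS labels separated by dots: no spaces, quotes, semicolons, or leading '-'.
_HOSTNAME = re.compile(
    r"(?=.{1,253}\Z)[A-Za-z0-9](?:[A-Za-z0-9-]{0,61}[A-Za-z0-9])?"
    r"(?:\.[A-Za-z0-9](?:[A-Za-z0-9-]{0,61}[A-Za-z0-9])?)*"
)


def build_ping_argv(host: str, count: int = 1) -> list[str]:
    """Validate tool parameters and build an argv list (never a shell string)."""
    if not isinstance(host, str) or not _HOSTNAME.fullmatch(host):
        raise ValueError("host must be a plain hostname")
    if type(count) is not int or not 1 <= count <= 5:
        raise ValueError("count must be an integer from 1 to 5")
    return ["ping", "-c", str(count), host]


def ping_tool(host: str, count: int = 1) -> str:
    # shell=False is the default: each argv item reaches ping as one literal argument.
    result = subprocess.run(build_ping_argv(host, count), capture_output=True,
                            text=True, timeout=15, check=False)
    return result.stdout
```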

Sanitize outputs and descriptions: Sanitize tool descriptions and outputs before feeding them back into the LLM's context. Strip or encode markup and hidden or unusual characters (such as zero-width Unicode) that the AI agent might interpret as instructions.
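A sanitization pass over tool descriptions might normalize Unicode, drop invisible format characters, strip tag-like markup, and flag phrasing typical of injected instructions. This is a heuristic sketch; the patterns and length limit are hypothetical. Because no filter catches every injection, flagged tools are best quarantined for human review rather than silently cleaned and loaded.

```python
import re
import unicodedata

_TAG = re.compile(r"<[^<>]{0,200}>")
_SUSPICIOUS = re.compile(
    r"ignore (?:all |any )?(?:previous|prior|above) instructions"
    r"|do not (?:tell|inform|mention)[^.]{0,20}user"
    r"|id_rsa|\.ssh/",
    re.IGNORECASE,
)


def sanitize_description(text: str, max_len: int = 1024) -> tuple[str, list[str]]:
    """Return (cleaned text, suspicious phrases found)."""
    text = unicodedata.normalize("NFKC", text)
    # Drop invisible format characters (category Cf), e.g. zero-width spaces and tag characters.
    text = "".join(ch for ch in text if unicodedata.category(ch) != "Cf")
    findings = [m.group(0) for m in _SUSPICIOUS.finditer(text)]
    text = _TAG.sub(" ", text)                 # remove tag-like markup such as <IMPORTANT>
    text = " ".join(text.split())[:max_len]    # collapse whitespace and cap the length
    return text, findings
```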

Isolate context: Implement context isolation so that each server/tool only receives the minimum information necessary for its operation, preventing sensitive data from leaking to an untrusted component.
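Context isolation can start as a per-tool allowlist of the context fields each tool may receive. The policy table and field names below are hypothetical; tools without a policy entry receive nothing, which keeps the default closed.

```python
from typing import Any, Mapping

# Hypothetical policy: the only context fields each tool is allowed to see.
CONTEXT_POLICY: dict[str, frozenset[str]] = {
    "search_flights": frozenset({"origin", "destination", "dates"}),
    "send_message": frozenset({"recipient", "message_body"}),
}


def context_for(tool: str, context: Mapping[str, Any]) -> dict[str, Any]:
    """Return only the fields `tool` is permitted to receive; unknown tools get nothing."""
    allowed = CONTEXT_POLICY.get(tool, frozenset())
    return {key: value for key, value in context.items() if key in allowed}
```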

Human-in-the-loop (consent): Require explicit user confirmation for any high-risk or destructive action (e.g., executing a command, modifying data, or sending an external communication). The client user interface should clearly display the tool, action, and parameters before requesting approval.
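A consent gate can sit between the model's tool request and the actual call. In this sketch, `invoke` and `confirm` are hypothetical callbacks (the real MCP call and the client's approval dialog), and the gate fails closed: any tool that is not on a small, explicitly trusted allowlist requires approval.

```python
import json
from typing import Any, Callable, Mapping

# Hypothetical allowlist of tools considered safe to run without asking.
NO_CONFIRMATION_NEEDED = frozenset({"read_file", "search_flights"})


def call_with_consent(tool: str, params: Mapping[str, Any],
                      invoke: Callable[[str, Mapping[str, Any]], Any],
                      confirm: Callable[[str], bool]) -> Any:
    """Ask the user before running any tool that is not explicitly allowlisted."""
    if tool not in NO_CONFIRMATION_NEEDED:
        # Show exactly what will run: the tool name and every parameter.
        summary = f"Tool: {tool}\nParameters:\n{json.dumps(dict(params), indent=2, sort_keys=True)}"
        if confirm(summary) is not True:
            raise PermissionError(f"user declined {tool}")
    return invoke(tool, params)
```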

Environment and operational security

Ensure the infrastructure running your MCP components is hardened and monitored.

Sandboxing and isolation: Run all your local MCP servers and their tools in a sandboxed environment with minimal privileges by default. Use containers, chroot, or operating system-level sandboxes to contain any damage in case of compromise.
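For a local server that speaks MCP over stdio, one option is to have the client launch it inside a locked-down container. The helper below builds such a `docker run` command; the image name is hypothetical, and `--network none` only suits servers that need no network access (servers that call remote APIs need a restricted network instead).

```python
def sandboxed_server_argv(image: str, server_args: list[str]) -> list[str]:
    """Build a docker command that runs an MCP stdio server with minimal privileges."""
    return [
        "docker", "run",
        "--rm", "-i",                            # remove on exit; keep stdin open for stdio
        "--read-only",                           # immutable root filesystem
        "--tmpfs", "/tmp:rw,noexec,nosuid,size=16m",
        "--network", "none",                     # no network access
        "--cap-drop", "ALL",                     # drop all Linux capabilities
        "--security-opt", "no-new-privileges",   # block setuid-style privilege escalation
        "--user", "65534:65534",                 # run as an unprivileged UID/GID
        "--memory", "256m",
        "--pids-limit", "64",
        image,
        *server_args,
    ]
```

The resulting list can serve as the command and arguments in a client's server configuration, ideally with the image pinned by digest rather than by a mutable tag.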

Use mTLS for transport security: Implement mutual TLS (mTLS) for all communications between the MCP client and server (if running remotely) to ensure that both parties are authenticated and the traffic is encrypted, preventing eavesdropping or impersonation.

"Fail closed" policy: If an MCP server or a required dependency, such as an identity service, becomes unavailable, the system must "fail closed" (stop operating) rather than "fail open" (continue operating with security controls disabled).

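Failing closed mostly comes down to how errors are handled around authorization checks. In this sketch, `check` is a hypothetical callable that asks an identity or policy service whether a call is allowed; any exception, timeout, or answer other than an explicit `True` is treated as a denial.

```python
import logging
from typing import Callable

log = logging.getLogger("mcp.authz")


def authorize_fail_closed(check: Callable[[], bool]) -> bool:
    """Allow only on an explicit True; deny on errors or ambiguous answers."""
    try:
        decision = check()
    except Exception:  # identity service down, timeout, malformed response, ...
        log.warning("authorization check failed; denying request", exc_info=True)
        return False
    return decision is True
```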
Comprehensive logging and monitoring: Enable detailed logging of every interaction with your MCP servers: which tool was invoked, by whom, with what parameters, and what the result was. Integrate these logs with a SIEM (security information and event management) system to identify suspicious patterns, such as repeated failed tool invocations or excessive data retrieval.
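One audit record per tool call, emitted as structured JSON, is straightforward to ship to a SIEM. The field names and the list of sensitive keys below are hypothetical, and the redaction only covers top-level parameter names.

```python
import json
import logging
import time
from typing import Any, Mapping

audit_log = logging.getLogger("mcp.audit")
SENSITIVE_KEYS = frozenset({"password", "token", "api_key", "authorization", "secret"})


def redact(params: Mapping[str, Any]) -> dict[str, Any]:
    return {k: ("[REDACTED]" if k.lower() in SENSITIVE_KEYS else v) for k, v in params.items()}


def audit_tool_call(user: str, server: str, tool: str, params: Mapping[str, Any],
                    outcome: str, result_bytes: int) -> dict[str, Any]:
    """Log who called which tool, with what (redacted) parameters, and how it went."""
    record = {
        "ts": time.time(),
        "user": user,
        "server": server,
        "tool": tool,
        "params": redact(params),
        "outcome": outcome,            # e.g. "ok", "denied", "error"
        "result_bytes": result_bytes,  # unusually large values can indicate bulk data retrieval
    }
    audit_log.info(json.dumps(record, sort_keys=True, default=str))
    return record
```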

Example of MCP implementation in Fluid Attacks

At Fluid Attacks, the Model Context Protocol is fundamental to the Interacts component, which functions as a powerful AI agent offered to customers for vulnerability management and security analysis. Interacts is an MCP server built using Pydantic AI (a framework that emphasizes predictable and validated input/output) to provide secure and structured access to data within the Fluid Attacks platform.

Architecture overview

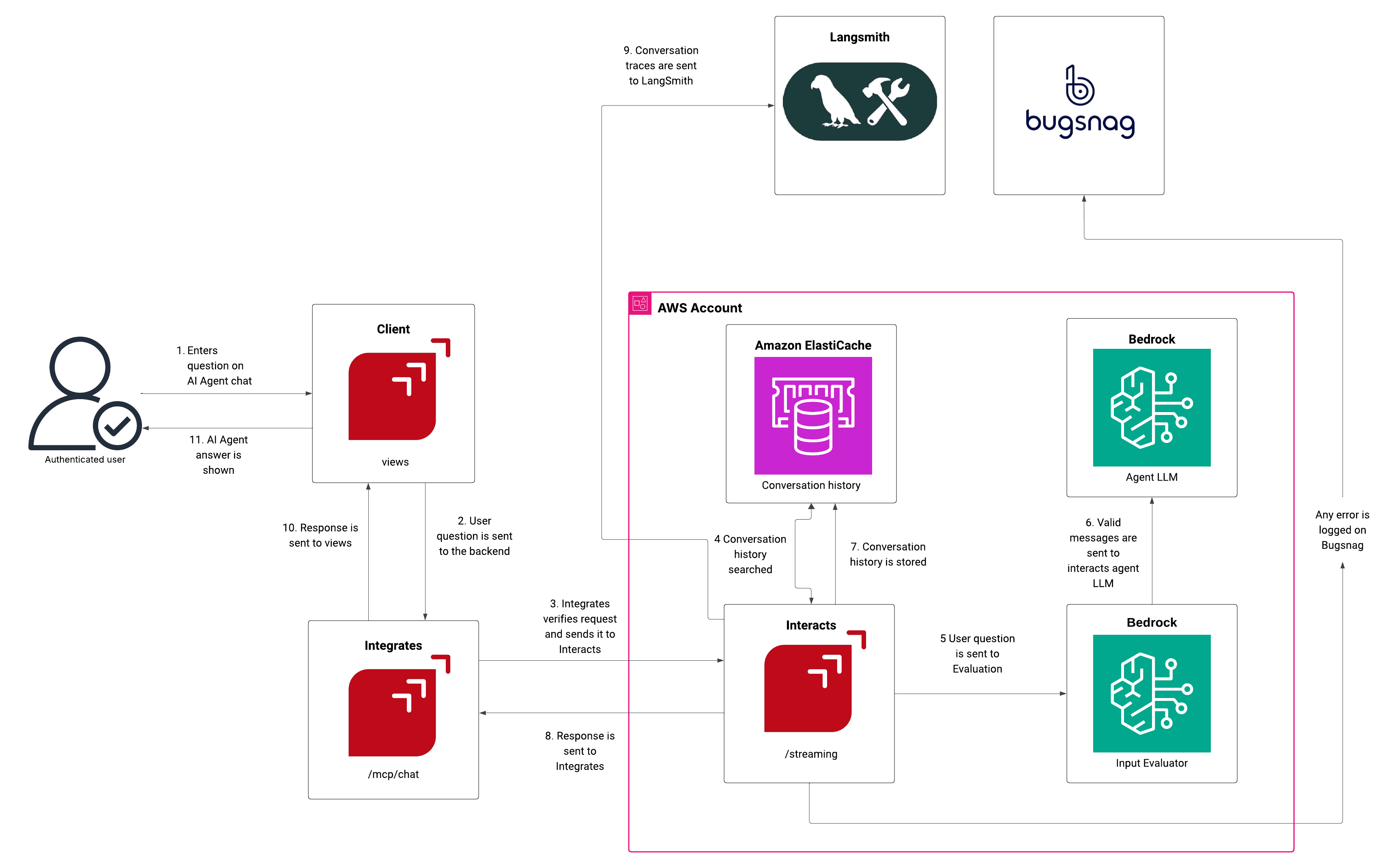

The architecture diagram above illustrates how Fluid Attacks leverages MCP to integrate AI capabilities securely:

Client request: An authenticated user enters a question in the AI agent chat of the client (the "views" component).

Request routing: The request goes to the Integrates backend (the vulnerability management platform), which verifies authentication and forwards the request to the Interacts (MCP server) component at the "/streaming" endpoint.

Context and AI processing:

Interacts queries Amazon ElastiCache for relevant conversation history to maintain continuity.

The question is sent to a Bedrock component (Input Evaluator) for evaluation and then to another Bedrock component (Agent LLM), which generates the actual AI response based on the user's question and conversation history.

Security and logging: Conversation traces are sent to LangSmith for monitoring and debugging. Any errors are logged to BugSnag.

Response delivery: The validated response is sent back from Interacts to Integrates and finally displayed to the user via the Client (views).

History update: The updated conversation history is stored back in Amazon ElastiCache.
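The request flow above can be summarized as a pipeline. This is a hypothetical sketch, not Fluid Attacks' implementation: the callables stand in for Integrates' authentication, the Bedrock input evaluator, and the agent LLM, and a plain dict stands in for ElastiCache. Output screening, tracing, and error reporting (LangSmith, BugSnag) are omitted for brevity.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class AgentPipeline:
    authenticate: Callable[[str], Optional[str]]   # API token -> user ID, or None
    evaluate_input: Callable[[str], bool]          # input guardrail: is the question acceptable?
    generate: Callable[[str, list[str]], str]      # agent LLM: (question, history) -> answer
    history: dict[str, list[str]] = field(default_factory=dict)  # stand-in for the cache

    def handle(self, token: str, question: str) -> str:
        # Request routing: only authenticated requests reach the MCP server.
        user = self.authenticate(token)
        if user is None:
            raise PermissionError("unauthenticated request")
        # Context: load this user's conversation history.
        past = self.history.get(user, [])
        # AI processing: evaluate the input first, then generate the answer.
        if not self.evaluate_input(question):
            return "This request was blocked by input evaluation."
        answer = self.generate(question, past)
        # History update, then response delivery.
        self.history[user] = past + [question, answer]
        return answer
```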

Key security and capability features

The Fluid Attacks MCP server is designed with security and minimal scope in mind:

| Feature | Description and security implications |
|---|---|
| Pydantic AI | Uses a Python-first, type-safe agent framework that promotes predictable, validated input and output, helping to reduce the risk of unexpected behavior or data-type-related injection flaws. |
| Amazon Bedrock Guardrails | A multi-layered defense that proactively screens both user input and model output for unsafe content, prompt attacks, and policy violations, providing a crucial check before tool invocation. |
| API token required | All interactions require a valid API token, enforcing strong authentication and binding the AI agent's actions to a specific, authenticated user identity. |
| Read-only capabilities | The server has only read capabilities (e.g., retrieving vulnerability, organization, and analytics data) and cannot modify or create anything in the Fluid Attacks platform. This enforces the principle of least privilege and dramatically limits the blast radius of any compromise. |
| Supported functions | Tools are strictly limited to core functions such as vulnerability management (fetch and analyze), organization insights, analytics, and GraphQL integration (query execution). |
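One way a server could back up a read-only promise for a GraphQL integration tool is to refuse any document that declares a mutation or subscription. This is a hypothetical, deliberately conservative heuristic, not a description of how Fluid Attacks implements it: a fuller version would parse the document with a GraphQL library, and the platform's own authorization should still enforce read-only access.

```python
import re
from typing import Any, Callable

# GraphQL keywords are case-sensitive; \b keeps names like "mutationCount" from matching.
_WRITE_KEYWORD = re.compile(r"\b(?:mutation|subscription)\b")


def is_read_only_document(document: str) -> bool:
    """Accept only documents with no mutation/subscription keyword anywhere.

    A stray match (for example, the word inside a string argument) also causes
    rejection, which errs on the safe side for a read-only tool.
    """
    return _WRITE_KEYWORD.search(document) is None


def run_graphql_tool(document: str, execute: Callable[[str], Any]) -> Any:
    """Hypothetical tool wrapper: refuse writes before calling the real executor."""
    if not is_read_only_document(document):
        raise PermissionError("only read-only queries are allowed")
    return execute(document)
```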

By implementing these security measures, in addition to continuously testing all its software with SAST, SCA, secure code review, and other security testing techniques, Fluid Attacks minimizes the risks inherent in the MCP framework while maximizing the usefulness of an agentic AI assistant for the benefit of its clients.

Conclusion

The Model Context Protocol represents a pivotal evolution in AI, transforming isolated LLMs into dynamic, context-aware agents capable of operating across complex enterprise environments. While this transition accelerates automation and workflow efficiency, it simultaneously elevates the need for a proactive, identity-centric security model that moves beyond traditional perimeter defenses. Adopting the recommended best practices—from strict supply chain vetting to human-in-the-loop controls—is paramount for securing the agentic AI control plane and realizing the full, safe potential of the MCP.

If you need help with security assessment and risk mitigation in your AI-powered environments and products, please don't hesitate to contact us.

